In April this year, a Tesla Model S running FSD (Full Self-Driving) hit and killed a motorcyclist in the United States.

It is at least the second publicly reported fatality involving FSD.

The driver admitted that he was looking at his phone while FSD was engaged. Although Tesla has long stressed that its "Full Self-Driving" software requires constant driver supervision, such warnings do little to stop drivers who are determined to behave recklessly.

The driver was ultimately arrested for causing a death with his vehicle, based on his admission that he had not been paying attention to the road while the system was engaged and had trusted the computer completely to drive for him.

Driver distraction was one cause of the crash, but the underlying problem is that Tesla's vision-only driving technology is unreliable, whether in the FSD system or in the Autopilot driver-assistance system.

Recently, The Wall Street Journal published an investigation into accidents involving Tesla's Autopilot system.

Since 2021, the National Highway Traffic Safety Administration (NHTSA) has investigated thousands of collisions involving Tesla's Autopilot, but the details have never been made public because Tesla considers the data proprietary.

By cross-referencing large volumes of crash data, federal documents, and local police records, The Wall Street Journal reconstructed 222 of those thousands of crashes, including 41 in which Autopilot swerved suddenly and 31 in which the car failed to recognize an obstacle and drove straight into it. The Journal concluded that the vision-only approach was the culprit behind Tesla's Autopilot crashes.

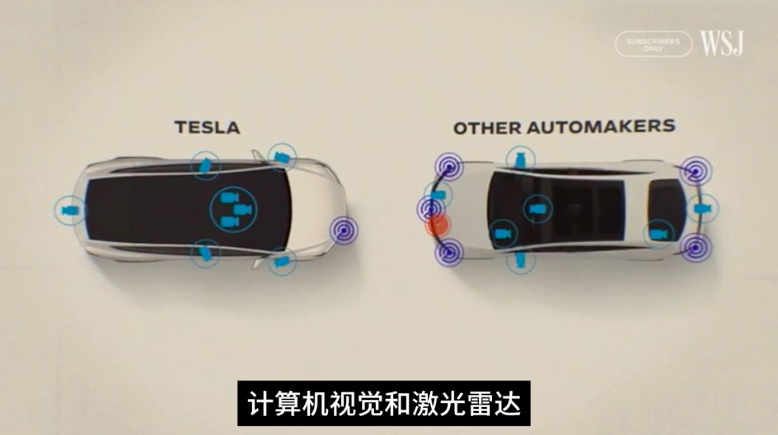

Missy Cummings, an autonomous driving expert at Duke University, said that Autopilot relies heavily on cameras alone, a choice at odds with the multi-sensor setups (millimeter-wave radar + camera + lidar) used by most major automakers worldwide. In her view, Tesla's approach amounts to exposing the public directly to the risk of crashes.

Lidar, in particular, is a sensor that helps detect obstacles by using lasers to measure distance. Lidar units were once very expensive, costing tens of thousands of dollars each, which was one of the main reasons Tesla abandoned the sensor. Today, however, a lidar unit can cost less than $500.
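The distance measurement mentioned above rests on a simple time-of-flight relation: a laser pulse travels to the obstacle and back, so the range is the speed of light multiplied by the round-trip time, divided by two. The sketch below illustrates only that principle; the helper name `range_from_round_trip` is hypothetical, and real lidar units add calibration, multiple-return handling, and noise filtering that are not modeled here.

```python
# Speed of light in a vacuum, in metres per second.
# Assumption: the slightly slower speed in air is ignored for this sketch.
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def range_from_round_trip(round_trip_s: float) -> float:
    """Return the distance (m) to a target given a pulse's round-trip time (s).

    Hypothetical helper illustrating time-of-flight ranging: the pulse covers
    the distance twice (out and back), so the one-way range is c * t / 2.
    """
    if round_trip_s < 0:
        raise ValueError("round-trip time must be non-negative")
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


if __name__ == "__main__":
    # A reflection arriving 400 nanoseconds after the pulse was emitted.
    print(f"{range_from_round_trip(400e-9):.2f} m")
```

Under these assumptions, a return after 400 ns corresponds to about 60 m, and each nanosecond of timing error shifts the estimate by roughly 15 cm, which is why lidar depends on very precise timing.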

According to the investigative video released by The Wall Street Journal, Tesla's vision-only system performs inconsistently in real-world conditions. Of the 12 crashes shown in the video, 8 occurred at night and 2 in poor light at dawn or dusk. Crashes in darkness or low light thus accounted for about 83% of the total.
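The 83% figure follows directly from the crash counts in the video. Note that it is a share of the 12 crashes shown, not of all Autopilot crashes:

$$\frac{8 + 2}{12} = \frac{10}{12} \approx 0.833 = 83.3\%$$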

Phil Koopman, an associate professor of electrical and computer engineering at Carnegie Mellon University, noted that Autopilot is prone to errors when it encounters situations it has not been trained on, such as an overturned truck.

Learning-based vision algorithms recognize objects by training on a large number of examples. If no comparable examples exist to train the algorithm, it may fail to recognize an obstacle ahead, even one that is obvious to a human.

Beyond its heavy dependence on lighting and on extensive training data, the vision-only approach has another weakness that the Journal revealed: inconsistencies between images from different cameras can lead to recognition errors.

In one nighttime crash, a wrecked pickup truck blocking the road was clearly visible to the naked eye. One of the Tesla's cameras could see it, but another could not. As the car closed in on the obstacle, Autopilot remained confused, and the crash followed.

As Missy Cummings put it: "Vision-only is a technology with serious flaws. We still have a lot of work to do on how to fix those flaws and how to make it recognize objects correctly."

Ray Diaz, a technician who has long repaired wrecked Teslas, told The Wall Street Journal: "Tesla says it provides very granular driving data, but that data has to be extracted from the car's onboard computer. In the footage I have seen, some drivers were driving recklessly, and in other cases I can't tell why the car crashed, which makes it hard to say whether the driver or Autopilot was at fault."

Musk has said that Autopilot could spare tens of millions of people from serious injury. What he did not say is that crashes have become "training material" for the vision-only system.

In China, autonomous driving companies currently fall roughly into a vision camp and a lidar camp. With the rise of end-to-end approaches, the vision camp seems to have the upper hand: given enough computing power, a vision-only end-to-end system can quickly learn to handle 95% of scenarios. That looks impressive, but the remaining 5% of uncovered scenarios contain many of the high-risk situations that lead to crashes on real roads.

In fact, engineers at many Chinese manufacturers generally believe that vision-only systems cannot guarantee sufficient safety, and that sensors such as lidar should no longer be treated as the "expensive appendix" Musk called them, but as a necessary component working alongside the vision system.

As lidar technology from Hesai Technology and RoboSense matures, the cost of a lidar unit has dropped to 2,000 to 3,000 yuan, and BYD and Ecarx have even said it could fall to 1,000 yuan. For a car costing hundreds of thousands of yuan, this added cost is negligible.
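To put those figures in proportion, assume for illustration a car price of 200,000 yuan (a hypothetical figure at the low end of "hundreds of thousands of yuan"):

$$\frac{1{,}000}{200{,}000} = 0.5\%, \qquad \frac{3{,}000}{200{,}000} = 1.5\%$$

Under that assumption, a lidar unit would amount to roughly 0.5% to 1.5% of the vehicle's price, and an even smaller share for more expensive cars.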

After all, the bottom line for intelligent driving is safety, not low cost.